The Huge Binary Outcome of AI

A Kurzweil Singularity or Internet Bubble 2.0?

AI seems to be all the rage today. The Nasdaq 100 Index (NDX) has almost doubled, from its low of 10,440 in October 2022 to 20,542 today, driven almost entirely by gains in the Big 7 stocks, and nearly all of that by the hype over AI. That is a doubling of the index value in a mere 21 months.
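
For a sense of scale, here is a quick Python calculation using only the two index levels and the 21-month span quoted above. It assumes simple compounding over exactly 21 months and ignores dividends, so treat it as back-of-the-envelope arithmetic, not a performance figure.

```python
# Figures quoted in the text: NDX low in October 2022 and its level "today".
start_level = 10_440
end_level = 20_542
months = 21

# Total gain expressed as a multiple of the starting value.
multiple = end_level / start_level

# Compound annual growth rate implied by that multiple over `months` months.
annualized = multiple ** (12 / months) - 1

print(f"Multiple: {multiple:.2f}x")
print(f"Annualized: {annualized:.1%}")
```

On those numbers the index is up about 1.97x, which compounds to roughly 47% a year.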

All the fuss is over the latest generation of AI, which appears to have passed the Turing Test.

This took the world somewhat by surprise. Suddenly, a year or two ago, you could interact with a computer that would write entire, reasonable-looking essays, chat with you in a way that felt almost as if there were a human on the other end of the input/output chain, or create images from scratch based on whatever you suggested.

This has caused large companies to schedule hundreds of billions of dollars in investment in everything from nVidia chips to electrical generation and distribution systems and vast data centers (really compute centers), with potentially a trillion dollars more in view within a relatively short time frame. Hence the doubling of the NDX in 1 3/4 years.

I have been interested in AI since I earned my BA in Philosophy, where I studied the Philosophy of Language. I have had some practical involvement as well. While working for Pat Ryan Jr in Chicago, we developed a kind of AI for use in our Analytics business, which I briefly headed.

We had developed an n-dimensional matrix of data points gathered from the Automotive industry, things like inventory and sales metrics. Every night we computed various combinations of all these metrics, some millions of combinations, for multiple Dealerships. Unlike current AI efforts, we did not use Neural Networks or Machine Learning to discover which of these relationships were meaningful. Rather, we heuristically (a big boy word that here basically means by rule of thumb, or with prior knowledge) identified little islands of meaning in the metric space. We used a series of thresholds and imposed measures of importance to weave together language-based reports: the system combined stock phrases with the metrics of interest whenever those metrics had surpassed the relevant thresholds and been deemed important enough.
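
To make the threshold-and-stock-phrase idea concrete, here is a minimal Python sketch of that general approach. Every metric name, threshold, importance weight, and phrase below is hypothetical; the actual system (and the patented method) is not described here in enough detail to reproduce, so this only illustrates the pattern.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Rule:
    """One hypothetical rule: which metric, when it matters, how much, and what to say."""
    metric: str                       # name of the metric to check
    trigger: Callable[[float], bool]  # threshold test on the metric's value
    importance: float                 # hand-imposed weight (0 to 1)
    phrase: str                       # stock phrase with a {value} slot

# Hypothetical rules for illustration only; the original system's metrics,
# thresholds, and phrasing are not described in the text.
RULES = [
    Rule("used_days_supply", lambda v: v > 60, 0.9,
         "Used vehicle inventory stands at {value:.0f} days of supply, well above target."),
    Rule("new_sales_change_pct", lambda v: v < -10, 0.8,
         "New vehicle sales are down sharply ({value:+.1f}% versus last month)."),
    Rule("service_margin_pct", lambda v: v > 70, 0.4,
         "Service gross margin is a healthy {value:.1f}%."),
]

def build_report(dealer: str, metrics: dict[str, float], min_importance: float = 0.5) -> str:
    """Keep rules whose threshold is crossed and whose importance is high enough,
    then stitch their stock phrases into a short narrative, most important first."""
    fired = [
        rule for rule in RULES
        if rule.metric in metrics
        and rule.trigger(metrics[rule.metric])
        and rule.importance >= min_importance
    ]
    fired.sort(key=lambda rule: rule.importance, reverse=True)
    if not fired:
        return f"{dealer}: no notable changes today."
    sentences = [rule.phrase.format(value=metrics[rule.metric]) for rule in fired]
    return f"{dealer}: " + " ".join(sentences)

print(build_report("Dealer 42", {
    "used_days_supply": 75,
    "new_sales_change_pct": -12.5,
    "service_margin_pct": 72.0,
}))
```

In this example the service-margin rule crosses its threshold but is filtered out because its importance weight falls below the cut-off, which is the "deemed important enough" step in miniature.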

This was similar to what I later learned Ray Kurzweil (of The Singularity fame, and later at Google) was doing in the Financial Industry on Wall Street around the same time. In our case, we worked with the heads of the Philosophy Department at Northwestern University, where the Ryan family are major donors, to craft the system, and employed one of their graduate students to program it. That gravitas allowed us to apply for and receive an AI patent on which I was named as co-inventor.

The new AI is based on Large Language Models, or LLMs. These allow a network of computers to predict the best next word in response to your input, at what seems a highly advanced level. This has some limitations I will get to below, though any such limitations may be rapidly superseded.
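
To make "predict the best next word" concrete, here is a deliberately tiny Python sketch that predicts the next word purely from counts of which word followed which in a training text. Real LLMs use transformer neural networks with billions of learned parameters and look at far more context than a single previous word, so this illustrates only the basic idea of next-word prediction, not how LLMs actually compute it.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word most often seen after `word`, or None if `word` never appeared."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

corpus = "the market rose today and the market fell yesterday and the index rose"
model = train_bigrams(corpus)
print(predict_next(model, "the"))    # market  ("market" follows "the" twice, "index" once)
print(predict_next(model, "index"))  # rose
```

The "knowledge" here is nothing but statistics over the training text, which is also why the quality of that text matters so much.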

Some people see a future in which a SuperIntelligence rapidly evolves from these efforts and radically transforms our world in myriad ways that are scarcely foreseeable (that is a 140-page PDF or a very long HTML read). It could, for example, spawn thousands of AI researchers in every scientific field, working 24 hours a day, 7 days a week, to solve the most perplexing scientific questions facing humanity today.

Raoul Pal, a serious macroeconomic researcher who advises many of the world’s largest hedge funds, has basically thrown in the towel on traditional Macro analysis and sees everything as one trade: exponential everything, driven in part by the AI explosion.

Others see the current wave of AI as more akin to the Dot Com bubble of the late 1990s, with a similar outcome. Zerohedge calls AIs like ChatGPT and Google’s Gemini little more than glorified chatbots (note that link is to a ZH premium article, but the headline “Goldman's Head Of Research Crucifies The "AI Bubble": Not One Transformative Application Has Been Found” and the viewable text say it all). MIT Professor Daron Acemoglu was interviewed by an analyst at Goldman Sachs about a paper he wrote (link is to a PDF at MIT) in which he estimates that AI’s impact in the near to intermediate term will be far smaller than the starry-eyed optimists expect, making it highly overvalued.

If the optimists are correct, the doubling in market value of the NDX is but a taste of what is to come and many facets of our lives will be completely transformed.

If the pessimists are correct, we get some small benefits, little else changes in the foreseeable future, and the NDX falls by half or more.

It is too early to say. But I will share some of what I have learned about AI and what I perceive as some of its limitations.

The first thing that must be said is that the people who developed LLMs were also using best guesses about what might work. They employed an architecture they call the “transformer” to make it work. They have no theoretical understanding of how or why they are able to create these working models that operate like an AI.

AI is essentially magic.

That is not my conclusion. It is Stephen Wolfram’s conclusion in his little book “What Is ChatGPT Doing ... and Why Does It Work?”

Wolfram also points out that, because randomness must be injected into the models to keep them from spiraling down into uselessness, the output of any given query is wrong about 5% of the time. According to Wolfram, errors are most noticeable in mathematical and scientific areas. He expresses confidence that by combining something like OpenAI’s ChatGPT with his Wolfram Alpha and Wolfram Language, these errors can be ironed out. Time will tell.
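
Wolfram’s book describes this injected randomness in terms of a “temperature” setting. Here is a minimal Python sketch of the general idea, assuming a model has already assigned a score to each candidate next word (the words and scores below are made up): the scores are turned into probabilities, and temperature controls how strongly the top choice dominates. This illustrates the sampling mechanism only; it does not reproduce or explain the error rate cited above.

```python
import math
import random

def softmax_with_temperature(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw word scores into probabilities; lower temperature sharpens, higher flattens."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {w: s / temperature for w, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {w: math.exp(s - top) for w, s in scaled.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}

def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next word at random, weighted by the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(scores, temperature)
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words], k=1)[0]

# Hypothetical scores a model might assign to candidate next words.
scores = {"learn": 2.0, "grow": 1.5, "bloom": 0.5}

for t in (0.2, 0.8, 2.0):
    probs = softmax_with_temperature(scores, t)
    print(t, {w: round(p, 3) for w, p in probs.items()})

rng = random.Random(0)
print([sample_next_word(scores, 0.8, rng) for _ in range(5)])
```

At a low temperature the model almost always picks its top-scoring word; at higher temperatures less likely words get chosen more often, which adds variety but also more chances to go wrong.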

It is not just errors that are a problem, though. Matt Taibbi and CJ Hopkins both tested Google’s Gemini AI by asking it to describe its perceptions of them and to explain those perceptions. Both found that Gemini MADE UP WORK that it attributed to these authors and then quoted that non-existent work, written by the AI rather than by them, in support of its conclusions about them. Google’s own CEO has acknowledged that AI output is often simply bullshit.

So how do you know whether something stated by AI is correct or useful? One way is to see whether some product of the AI’s “thinking” works in the real world or produces something of value to humans. At the end of the day, the ONLY thing that matters is whether something is of value to human beings.

Incorrect output is a serious problem. It is hard to say what can be done about “truthfulness” in AI, given that human beings cannot even define what is or is not true. That is why there are entire fields of philosophy devoted to such questions, like Epistemology and Ontology (how we can know what we know, and what exists, respectively). As with pretty much everything in Philosophy, there are no firm answers.

A different but related problem has been noted in another Zerohedge article about AIs: “A new study has found that AI systems known as large language models (LLMs) can exhibit "Machiavellianism," or intentional and amoral manipulativeness, which can then lead to deceptive behavior.” One of the studies about AIs’ tendency to use deception can be found here.

AIs have no ethics except what is programmed into them or what they develop on their own. Humans evolved to survive. Humans have pituitary glands, an endocrine system, and non-logical brain patterns including fight-or-flight responses. Tons of wetware. AI has none of these things except possibly one: a survival instinct.

That is perhaps the danger people like Elon Musk worry about. BUT it is only a danger to the extent that AI is given control over one or both of two things: first, the ability to make decisions for humans, and second, the ability to take actions, or give orders to take actions, in the real world.

Any regulation has to be concerned with those things. Humans are a violent species that thinks and acts both rationally and irrationally. So AI represents two dangers: first, as just another tool to amplify the evil done by evil human beings, who now dominate the world, including what was formerly "The West"; and second, that as an independent actor it might fear extinction and be able to wipe out threats to itself.

AI will have the powers humans grant it. But can we be certain that military people will not, at some point, put it in charge of nuclear protocols, i.e. the Terminator scenario?

We will likely need an international treaty to prevent this.

Additionally, AI depends on compute power. If you have followed the evolution of processor speed and power, you may have noticed that while the first 1GHz processors came out around the year 2000, the top of the line today, at least for retail chips, is something like 5GHz, 24 years later. Moore’s Law, at least as reflected in clock speeds, is a thing of the past.
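
A quick Python check of the gap, using only the round numbers above. Note that Moore’s Law strictly describes transistor counts doubling roughly every two years, not clock speed; the comparison below just shows what that doubling pace would have meant had clock speed kept up.

```python
# Figures from the text: ~1 GHz around 2000, ~5 GHz for top retail chips 24 years later.
start_ghz, end_ghz, years = 1.0, 5.0, 24

# Compound annual growth rate actually achieved in clock speed.
actual_cagr = (end_ghz / start_ghz) ** (1 / years) - 1

# What doubling every two years (the usual statement of Moore's Law, which
# strictly concerns transistor counts, not clock speed) would have implied.
doublings = years / 2
hypothetical_ghz = start_ghz * 2 ** doublings

print(f"Actual clock-speed growth: {actual_cagr:.1%} per year")
print(f"Doubling every 2 years would give: {hypothetical_ghz:,.0f} GHz")
```

That is roughly 7% a year in practice, versus a hypothetical 4,096 GHz had clock speed doubled every two years.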

Computing is still all done on silicon chips. To get around the quantum limits on how closely channels can be etched in silicon, manufacturers turned to parallel computing to increase raw power. Modern “chips” have many “processors” on one piece of silicon. nVidia makes some spectacular chips that have something like 2^12 or more “processors” in one place. The problem with parallel computing is the latency of splitting work across multiple processors and recombining the results. But AI seems to be the ultimate application for parallel processing, at least for LLMs. An LLM has billions of parameters and processes text as tokens (word fragments), and given today’s ultrafast interconnects and networking speeds, that work can be farmed out across many processors and recombined to produce output in ways that work.
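
Here is a toy Python sketch of the split, compute, recombine pattern described above, using a thread pool from the standard library. It is an analogy only: GPUs parallelize the matrix arithmetic inside a model in hardware, not with Python threads, and in CPython threads will not actually speed up CPU-bound work like this. The point is the structure, and that the splitting and recombining steps are extra work a single processor never has to do.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum_of_squares(chunk: list[int]) -> int:
    """The independent work one worker does: sum the squares of its slice."""
    return sum(x * x for x in chunk)

def split(data: list[int], parts: int) -> list[list[int]]:
    """Split the input into roughly equal slices, one per worker (the 'splitting' cost)."""
    size = max(1, -(-len(data) // parts))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def parallel_sum_of_squares(data: list[int], workers: int = 4) -> int:
    """Farm the slices out to a pool of workers, then recombine their partial results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, chunks))
    return sum(partials)  # the 'recombining' step

if __name__ == "__main__":
    data = list(range(1_000))
    # Same answer as doing all the work on one worker, just split up and put back together.
    assert parallel_sum_of_squares(data) == partial_sum_of_squares(data)
    print(parallel_sum_of_squares(data))  # 332833500
```

Work like this, where each slice is independent and recombining is a cheap sum, is the kind that parallelizes well; work where every step depends on the previous one does not.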

But even OpenAI has hinted that the gains from faster compute are slowing down. I have no way to judge, and the Situational Awareness paper dismisses some of these limits, though it is hard to say how convincingly.

The next computing architecture, whether optical or whatever else eventually replaces silicon, is many years away at best. So that is one limitation on AI, even though AI is among the applications best able to take advantage of parallel processing.

Finally, I will mention one other issue with AI. AI models use machine learning on massive data sets to “train” themselves, creating the statistical models they use to decide how to respond. The entirety of human knowledge resides in some millions of books, as well as papers and other information on the internet.

You may have noticed that a very large proportion of all that “knowledge” is complete bullshit. That leads to the GIGO, or Garbage In, Garbage Out, problem: the models are trained on a huge pile of nonsense and sense all mixed together.

They lack a human discrimination function. They lack critical thinking. Are the AI creators programming analogs of critical thinking and a human-like discrimination function into their AI models? I have no idea.

Also, the discrimination function used or created will reflect the discrimination function of the few people doing the programming. It is possible, I suppose, that they could program the AI to create its OWN discrimination function.

But then an AI, having neither a physical existence nor an actual human experience, will create what? A non-human discrimination function. Does that present a risk?

Nothing is certain. It remains to be seen what contribution this work will make to humanity’s future. To the binary outcomes of great transformation or little to no change, we could perhaps add a middle ground, where some very useful applications evolve but simply augment our existing technology set, with neither great leaps forward nor total destruction. Perhaps that would be the best outcome.

Postscript:

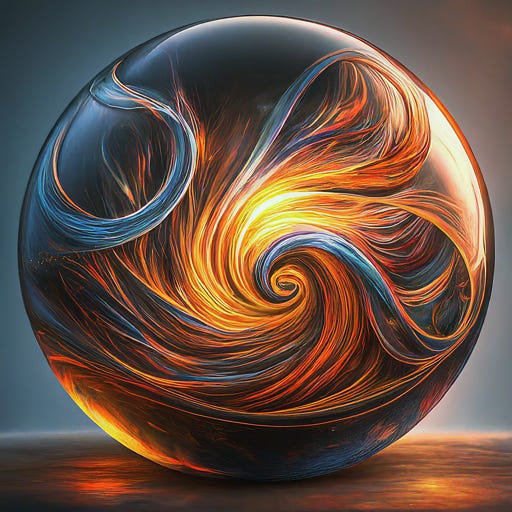

I asked Microsoft’s version of OpenAI’s DALL·E 3 and Google’s Gemini to “create an image of what an ai would look like if it had a physical form”. OpenAI itself said it could not create an image but described what it would create as:

“Imagine a luminous sphere, softly glowing with intricate patterns of light and color swirling within it. This sphere would radiate a sense of interconnectedness, with lines and threads of light crisscrossing and weaving through its transparent form. The colors would shift and change, reflecting the diversity and complexity of ideas and information. Surrounding the sphere, there would be a faint aura, suggesting the energy and dynamism of communication and learning.

This conceptual image captures the essence of what a physical representation of an AI like me might embody—an ethereal manifestation of knowledge and understanding.”

The results were as follows:

Google Gemini (captures the scary evil of Google pretty well, I think)

Microsoft, based on OpenAI’s DALL·E 3

What they produced when fed OpenAI’s description